At 9:58 pm on March 18, 2018, Elaine Herzberg was killed as she walked her bike across a street in Tempe, Arizona. She was 49, homeless and may have been battling addiction. The vehicle that struck her was a Volvo XC90 SUV outfitted by Uber’s Advanced Technology Group to be self-driving.

I write this more than 6 years and 8 months after the accident. Elaine Herzberg is all but forgotten, and Uber’s dreams of a fleet of self-driving robotaxis have ended.

At the Scene of the Crime

I am in Tempe, Arizona, on business, and after two days of meetings, I make my way out of town on an overcast Saturday morning. I am seeing quite a few Waymo robotaxis in the area. It is as if Waymo has snuck in to fill the void left by Uber. I am reminded of the unfinished business of my Uber accident coverage and feel compelled to visit the scene. I had only covered the accident from afar with a series of articles. I had never checked my assertions against the official report on the accident by the National Transportation Safety Board (NTSB), nor had I reported on the aftermath.

I had initiated coverage with the sincere belief that self-driving technology could save us from running into each other and the hope that a single death would not make the public pull the plug on self-driving projects, many of which had sprouted all across the U.S. Almost 38,000 people died in traffic crashes in the U.S. the previous year (2017), according to the National Highway Traffic Safety Administration.

For my 2018 series, I pored over the accounts in the local news and watched the videos, released by Tempe police, from cameras inside and outside of the Uber vehicle. I came to realize this was not a story of Luddites wanting revenge against machine owners or of technology companies insisting they can be better than humans despite failures, but a story of the failures of one technology company.

Who to Blame?

Uber very quickly settled a lawsuit brought by Elaine Herzberg’s husband and daughter. In a report dated just 11 days after the accident, CNN said a settlement this soon after a fatal crash is unusual. Then Uber shuttered its self-driving program and moved out of town, possibly avoiding criminal prosecution.

The victim’s family then went after the city of Tempe, seeking $10 million for having a pathway on the median but no crosswalks or lighting. It is not known whether or how the case was settled, but cities usually have ironclad immunity in place and can usually pass the burden of proof to victims.

Volvo was quick to clear itself of any wrongdoing. According to the NTSB report, Volvo took it upon itself to run 20 simulations, varying the speed and angle of the victim, to determine if its own automatic braking and evasive-action systems, which Uber had disabled, would have prevented or mitigated the accident. In 17 of those cases, the Volvo would have been able to avoid Herzberg altogether. In the other 3 cases, it would have hit her at speeds of less than 10 mph, instead of the actual fatal speed of 39 mph.

Had the Volvo been left to its own devices, Elaine Herzberg would still be alive.

A Convenient Culprit

That leaves “operator” Rafaela Vasquez as the remaining suspect.

For regulatory compliance, self-driving cars run tens of thousands of miles, initially with two operators in the front seats. The primary job of the operator on the passenger side is to monitor the operator at the controls. By the time of the accident, Uber had gone to single-operator configuration and made it the operator’s job to take control of the vehicle if needed — or, as we found out 5 years later when Vasquez pled guilty — to take the blame if they didn’t.

Uber’s interior camera recorded Vasquez looking down toward a smartphone for a full 6 seconds before the accident, looking up only “about 1 second before the crash.” However, the ambiguity of blame kept Vasquez in legal limbo for 5 years until she finally pled guilty to “endangerment” in July of 2023. You can read the details of the case in Wired. She is currently serving 3 years of supervised probation.

Last Steps

I reconstruct Elaine Herzberg’s last footsteps on Earth and imagine tracing her path from a parking lot underneath an overpass as she crosses the southbound lane of Mill Avenue. She is walking her bike, which she is using to convey some, if not all, of her belongings in two white plastic bags.

She stepped onto a median with an X-shaped walkway that provided a smooth passage for her and the bike she was walking, sparing them the relatively rough ground and scrubby vegetation. But retracing the path offers a surprise. Instead of a smooth walkway, there are loose, broken rocks. The city of Tempe has done this to discourage people from using the X as a walkway.

The signs that tell people to cross at the intersection are still in place, but I wonder if anyone who had come this far would go back the way they came and go 380 feet to the intersection.

I have to imagine the city of Tempe saying, “We put up signs” when the victim’s family sued them.

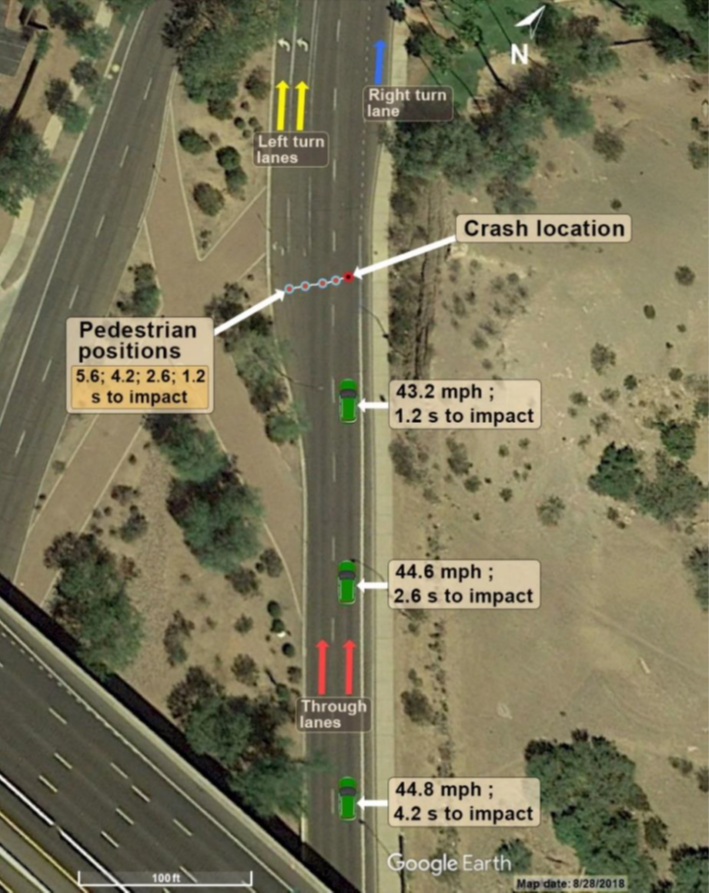

Herzberg steps off the median curb to cross 4 lanes of northbound traffic. She makes it across two turn lanes and the left through lane. As she is in the last lane, she looks up, startled by the lights of the Uber vehicle bearing down on her. It’s too late.

Automation Complacency

My trip to Tempe was part of a 1500-mile road trip from my home in the Bay Area, which also included San Diego. Over the long spells of straight-line driving for which Arizona is known, there were many times when I felt as if I could have taken a break. I did not watch videos, but there were times when a hand left the wheel and my attention was elsewhere. My vehicle (a Mercedes E400 with Level 2 autonomy: automatic braking, lane centering and adaptive cruise control) inspires that kind of confidence.

It’s a false sense of security. Automation complacency is the tendency of humans who are kept in the loop of a highly automated system, mostly in a monitoring role, to stop paying attention. The NTSB has examined automation complacency in Level 2 automation and concluded it was the cause of two fatal crashes.

If Level 2 autonomy can make me inattentive, I wonder how much more inattentive operators would have been in Uber self-driving vehicles with Level 4 autonomy. Level 4 autonomy allows vehicles to go point to point or loop without any human intervention whatsoever. Did Uber ATG (Advanced Technology Group) not know about automation complacency, and did it expect lone operators not to lapse into inattentiveness or seek distraction?

It is a question the NTSB answers in its conclusion: “…the Uber ATG did not adequately recognize the risk of automation complacency and develop effective countermeasures to control the risk of vehicle operator disengagement, which contributed to the crash.”

To be continued…