A clean-sheet design is often presented as a good thing. But is it really? More often, an engineering team tasked with making the next product has to start from an existing design. Let’s say the next product is an electric vehicle. Does it not make sense to start with the suspension, seats, body in white, chassis, etc., from an existing vehicle? Why reinvent the wheel?

I am reminded of an aerospace company CTO who told me about the horror of engineers designing a whole product from scratch — only to discover in midstream that another division was building the exact same product.

Reinventing the wheel is real. It happens all the time. Why? Because an enterprise’s departments and divisions each store their data in silos (warehouses or, in their modern-day equivalent, server farms), spread across different programs, file formats and databases.

Of course, this problem could be avoided if we put all our data in a central location (the cloud) and used one app to read it all. But can we all do that? Many engineers, especially those who work for military agencies, defense contractors and super-secretive consumer product companies (think Apple), are not allowed to take any data or prototypes outside their walls. And how much of an undertaking would it be to feed all that data into one app?

Enterprise Search

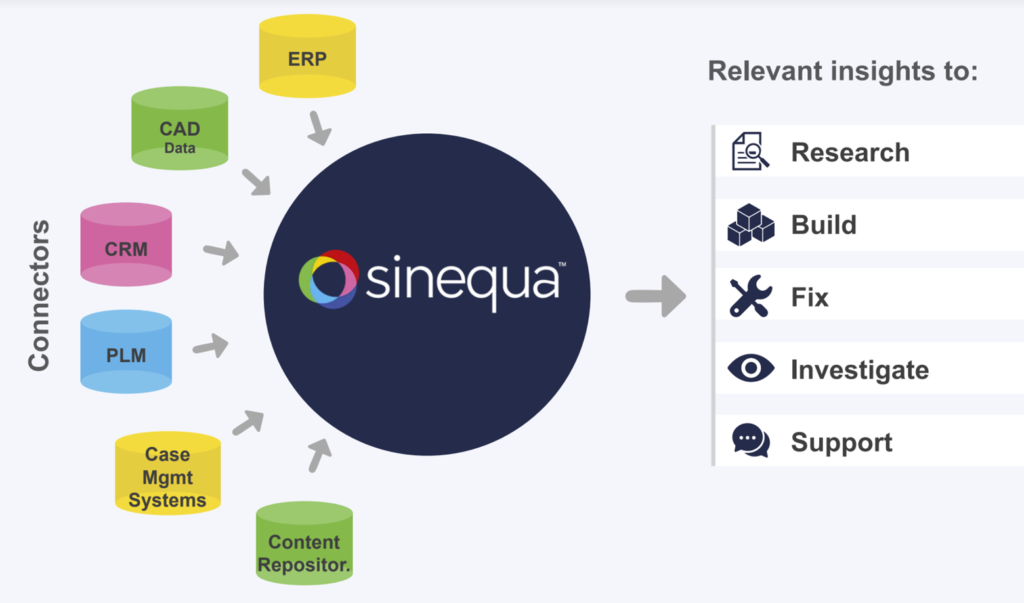

Sinequa, a software company, offers a solution to the problem of data silos: leave the data where it is and connect to it using a search engine with a natural language interface. That’s right, like ChatGPT, only different. ChatGPT may have read the world’s data, but Sinequa has read your company’s data.

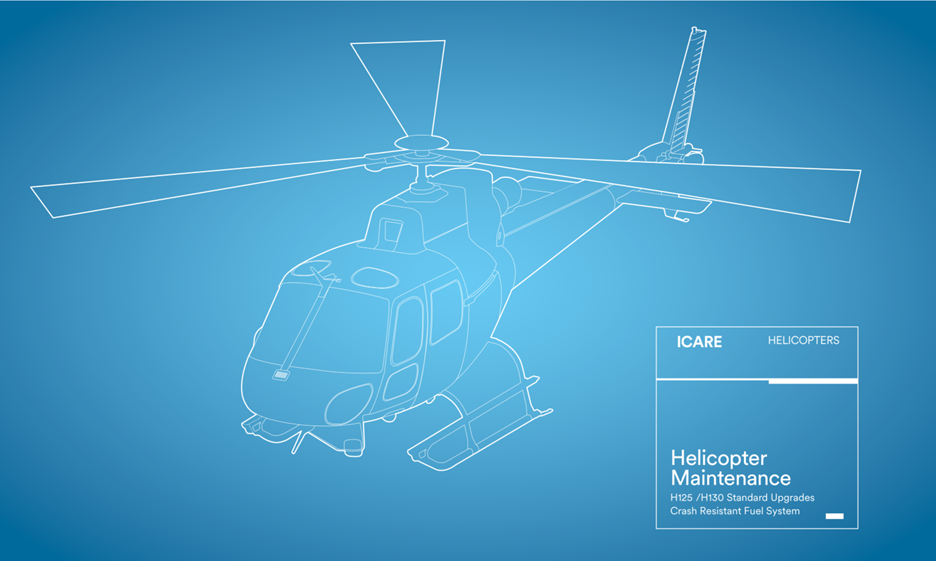

Sinequa offers another use case, that of Airbus Helicopters. Airbus, the parent company, had already been using Sinequa for enterprise search. The helicopter division installed Sinequa One Cloud to help reduce the load on its 23 support centers, which collectively get 30,000 requests annually. Neither Sinequa nor Airbus says how long it took to index all the data, but once it was done, customers could get part and maintenance information simply by asking in natural language.

This was much faster and, for the user, easier than the alternatives, such as entering everything into an enormous database that only those trained on it could use. SQL queries, anyone? Natural language queries require no such training.

Companies have often tried to consolidate their data and have it read by PDM and ERP systems, but it can take a big company months to feed all its documents into them, followed by downtime and then days or weeks before everyone gets up to speed.

With the Sinequa method, all the data stays in place and Sinequa searches through it. There is no program to learn and no massive conversion effort.
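To make “leave the data where it is” more concrete, here is a minimal sketch of federated search. Each silo is wrapped by a connector that answers queries against the data where it lives. A coordinator fans the query out to every connector and merges the hits. The `Connector` protocol, the class names and the naive term-count scoring are hypothetical illustrations, not Sinequa’s API. A production system would query a prebuilt index rather than scan documents at query time.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Hit:
    source: str   # which silo the document lives in
    doc_id: str   # identifier within that silo
    score: float  # relevance score (naive term overlap in this sketch)


class Connector(Protocol):
    """Hypothetical interface: one connector per silo (PLM, file share, ERP...)."""
    name: str

    def search(self, query: str) -> list[Hit]: ...


class InMemoryConnector:
    """Stand-in for a real connector; the documents never leave this object."""

    def __init__(self, name: str, docs: dict[str, str]) -> None:
        self.name = name
        self._docs = docs

    def search(self, query: str) -> list[Hit]:
        terms = set(query.lower().split())
        hits = []
        for doc_id, text in self._docs.items():
            # Score = number of distinct query terms found in the document.
            score = len(terms & set(text.lower().split()))
            if score:
                hits.append(Hit(self.name, doc_id, float(score)))
        return hits


def federated_search(query: str, connectors: list[Connector], top_k: int = 5) -> list[Hit]:
    """Send the query to every silo in place and merge the results by score."""
    merged: list[Hit] = []
    for connector in connectors:
        merged.extend(connector.search(query))
    return sorted(merged, key=lambda h: h.score, reverse=True)[:top_k]


if __name__ == "__main__":
    plm = InMemoryConnector("plm", {"P-100": "titanium washer for rotor hub"})
    manuals = InMemoryConnector("manuals", {"M-7": "rotor hub maintenance interval"})
    for hit in federated_search("rotor hub washer", [plm, manuals]):
        print(hit)
```

The design choice is that nothing is copied into a central store. Only the query travels to each silo, and only the ranked hits come back.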

Siemens Also a Customer

In the world of manufacturing, no company is bigger or has a more diverse product line than Siemens. The German conglomerate has over 200,000 employees. Supporting so many products, current and outdated, means finding the correct information among more than 20 million scattered technical documents. To help its beleaguered tech support staff, Siemens has integrated Sinequa’s GenAI into its Siemens Industry Online Support (SIOS) portal. By Sinequa’s estimates, the staff’s search time for the correct information has decreased by 10 to 30%. The system now handles over 200 million inquiries annually from customers, partners and employees, and Siemens has benefited from not having to build 12 additional call centers, saving the company an estimated $1.9 million.

Customers who use SIOS find their information more often and more quickly, so staff members have to get involved less often.

Enter GenAI

GenAI, short for generative artificial intelligence, is a generic term for AI that can create new content, whether words or pictures, based on what it has learned from existing content. Two types of GenAI, “AI for design and engineering” and “AI for Product Lifecycle,” have been climbing the Gartner Hype Cycle as companies, analysts and media start to realize that GenAI, the object of public infatuation, is more than a natural language interface: it can and should be applied to help sort a company’s data.

Gartner lists Sinequa under “AI for Product Lifecycle” along with, strangely enough, the Big 4 CAD vendors (Autodesk, Dassault Systèmes, PTC and Siemens). No other pure enterprise search firm is on that list.

Sinequa (from the Latin “sine qua non,” which means “without which not” or “something essential”) was doing AI before AI was fashionable, says Xavier Pornain, who currently wears an SVP of Sales hat but has been with the company since it got its name. We call on Xavier to give us a demo of Sinequa’s capabilities.

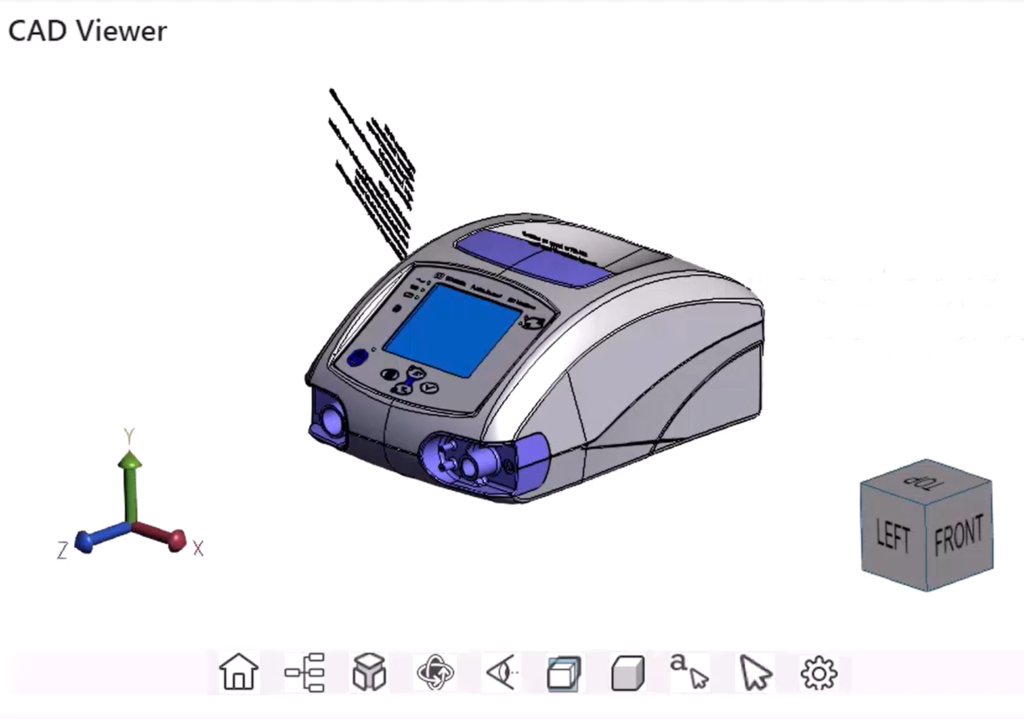

From the very start of the demo, the ease with which Sinequa fields natural language questions is apparent. May it forever banish SQL queries and arcane PLM commands to oblivion! Xavier merely has to ask in English for what he wants, and Sinequa answers. It makes me long for CAD programs to work the same way. Instead of having to learn the language of the CAD program (for example, DIMLINEAR in AutoCAD), why can’t I just ask my CAD program to “dimension this”?

Sinequa cannot search by CAD shape, but it can extract metadata such as GD&T information, part numbers and bills of material. Sinequa can read more than 350 file formats. Most important to engineers, it can read all of the major PLM files and most, if not all, of the major CAD formats to extract their metadata.

For super-secretive companies, Sinequa’s application can run on the company’s own servers or in a private cloud.

How Does it Work?

Sinequa reads everything your company has created. By its own count, the company has built over 200 connectors and deciphered 350 formats. Because of that, it can function as a digital assistant. Sinequa points out the advantages of its GenAI Assistant over a human assistant (to which I’ve added one of my own): it is never tired, never sassy and never calls in sick. Also, the GenAI has read everything your company has produced and remembers all of it.

We presume Sinequa’s GenAI will show proper discretion. We would not want it showing off how much it knows to the rank and file by serving up HR info, exposing executive bonuses or, worse, divulging state secrets. Sinequa assures us this will not happen. The company says it treats security as paramount and that its system recognizes and honors the permissions set by enterprise apps.
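Honoring permissions set by enterprise apps is commonly called security trimming. Each indexed document carries the access list from its source system, and results are filtered against the requesting user’s groups before anything is shown to the user or passed to an LLM. The sketch below assumes documents record their allowed groups as a simple set. The field names, group names and deny-by-default rule are hypothetical choices, and nothing here describes how Sinequa actually stores or checks permissions.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    doc_id: str
    text: str
    # Groups allowed to read this document, copied from the source system's ACL.
    # An empty set means nobody can read it (deny by default).
    allowed_groups: frozenset[str] = field(default_factory=frozenset)


@dataclass(frozen=True)
class User:
    name: str
    groups: frozenset[str]


def can_read(user: User, doc: Document) -> bool:
    """A user may read a document if they share at least one group with its ACL."""
    return not user.groups.isdisjoint(doc.allowed_groups)


def trim_results(user: User, results: list[Document]) -> list[Document]:
    """Drop every result the user is not entitled to see.

    Running this before results reach an LLM means restricted text never
    ends up in a prompt, so it cannot leak into a generated answer.
    """
    return [doc for doc in results if can_read(user, doc)]


if __name__ == "__main__":
    results = [
        Document("HR-1", "executive bonus plan", frozenset({"hr", "executives"})),
        Document("ENG-9", "rotor hub torque spec", frozenset({"engineering"})),
    ]
    engineer = User("dana", frozenset({"engineering"}))
    print([d.doc_id for d in trim_results(engineer, results)])  # ['ENG-9']
```

Filtering happens before generation rather than after it, because removing a sentence from an answer that has already been generated cannot undo what the model was shown.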

Sinequa’s system is designed to read and process vast amounts of data quickly. How long will it take to set up, though? The company makes no mention of setup time, but it no doubt depends on the size and complexity of the dataset. Millions of documents and records should be fine, says Sinequa. But ill-formed data that requires cleanup, or files and databases Sinequa has not encountered before, may cause delays. The odds of the latter are small, given how many connectors and formats it already supports.

Companies with more than one PLM system in operation will rejoice at being able to ask questions across all of the company’s data (PLM, PDFs, Word and Excel files, technical documentation, etc.), for example, “Show me the latest information about a part.”

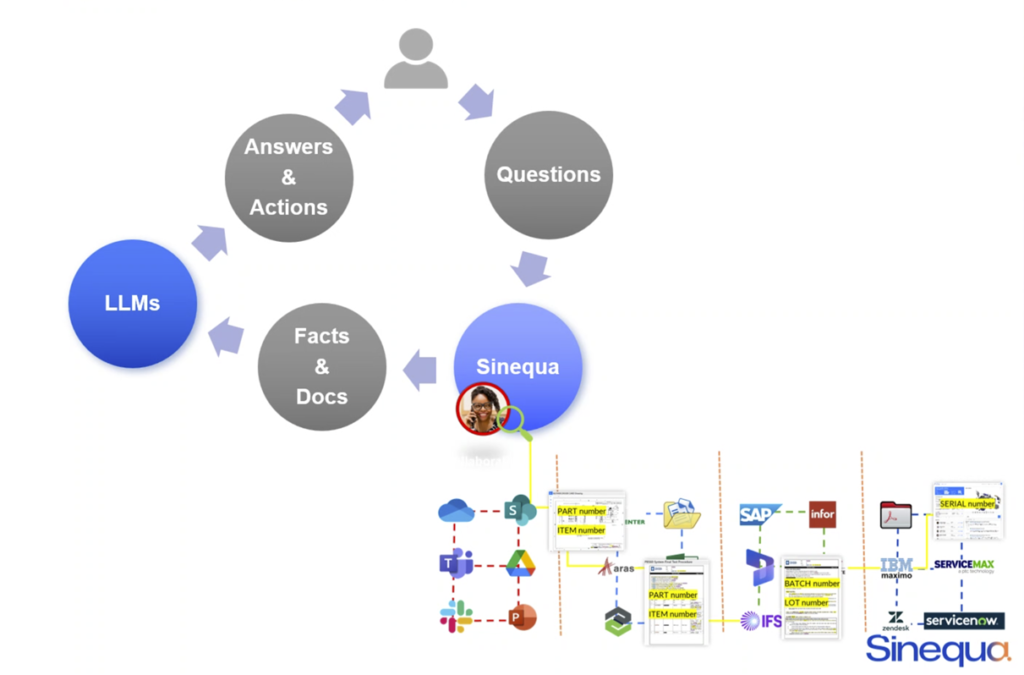

Sinequa “leverages” the publicly available or private LLM of your choice, whether it be ChatGPT, Gemini, Perplexity… whatever. It does not have an LLM of its own. If you prefer ChatGPT, Sinequa will create a custom Assistant that uses ChatGPT as its engine.

Combining Sinequa’s search technology with an LLM like ChatGPT ought to make its searches more nuanced and its responses more contextually relevant.

Here’s how Sinequa handles input and output with an LLM:

A user inputs a question in natural language. Sinequa runs the question through its neural search to better understand the user’s intent in the context of who that user is, and then augments the query. Presumably, Sinequa learns something about each user during setup and continues to learn. For example, knowing that the user is a design engineer, it would treat a question about a washer as a question about a fastener, whereas ChatGPT on its own might think of washing machines. The augmented query then retrieves relevant information from all the applicable company data sources (not from data out in the wild).
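The washer example can be illustrated with a toy version of context-aware query augmentation. The idea is to expand the user’s terms with related terms drawn from a vocabulary chosen by the user’s role. Sinequa’s neural search is not described in enough detail to reproduce, so this is a deliberately simple, dictionary-based stand-in. The role names, vocabularies and `OR` query syntax are invented for illustration.

```python
# Hypothetical role-specific vocabularies. A real system would learn these
# associations from user context rather than hard-code them.
ROLE_EXPANSIONS: dict[str, dict[str, list[str]]] = {
    "design_engineer": {
        "washer": ["fastener", "flat washer", "lock washer"],
        "spec": ["specification", "drawing"],
    },
    "facilities": {
        "washer": ["washing machine", "laundry"],
    },
}


def augment_query(query: str, role: str) -> str:
    """Append role-appropriate related terms for any query term that has them."""
    vocabulary = ROLE_EXPANSIONS.get(role, {})
    extra: list[str] = []
    for term in query.lower().split():
        for related in vocabulary.get(term, []):
            if related not in extra:  # avoid duplicate expansions
                extra.append(related)
    if not extra:
        return query  # unknown role or no matching terms: leave the query alone
    return f"{query} ({' OR '.join(extra)})"


if __name__ == "__main__":
    print(augment_query("washer torque", "design_engineer"))
    # washer torque (fastener OR flat washer OR lock washer)
    print(augment_query("washer torque", "facilities"))
    # washer torque (washing machine OR laundry)
```

The same words produce different searches for different users, which is the point of the washer example. The original terms are kept and the expansions are only added.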

Sinequa adds citations to the LLM output so users know where the answers are coming from and can check the sources for accuracy and relevance.
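One common way to get an LLM answer with checkable citations works in three steps: number the retrieved passages in the prompt, ask the model to cite those numbers, and then map the numbers back to source documents. The sketch treats the LLM as any function from prompt text to answer text. That abstraction is how a model-agnostic tool could swap ChatGPT for Gemini or a private model. The prompt wording, function names and `[n]` citation format are hypothetical, and the sketch does not describe how Sinequa does it.

```python
import re
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Passage:
    source: str  # e.g. a document path or PLM record ID
    text: str


# Any model can sit behind this signature: prompt in, answer text out.
LLM = Callable[[str], str]


def build_prompt(question: str, passages: list[Passage]) -> str:
    """Number each retrieved passage so the model can cite it as [n]."""
    numbered = "\n".join(f"[{i}] {p.text}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the sources below and cite them as [n].\n"
        f"{numbered}\n\nQuestion: {question}"
    )


def answer_with_citations(
    question: str, passages: list[Passage], llm: LLM
) -> tuple[str, list[str]]:
    """Return the model's answer plus the sources it cited, in first-cited order."""
    answer = llm(build_prompt(question, passages))
    cited: list[str] = []
    for number in re.findall(r"\[(\d+)\]", answer):
        index = int(number) - 1
        # Ignore citation numbers that don't match a real passage.
        if 0 <= index < len(passages) and passages[index].source not in cited:
            cited.append(passages[index].source)
    return answer, cited
```

As a usage example, a stand-in model that replies “Use washer P-100 [1]; the interval is in the manual [2]. See also [7].” would return the first two passages’ sources. The out-of-range `[7]` would be discarded. Checking citations against the passages actually supplied is one inexpensive guard against a model inventing sources.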

Conclusion

LLMs, by their nature, can condense vast amounts of information into concise, easy-to-understand summaries. For example, in a research or clinical trial setting, an LLM can summarize complex scientific data in plain English. Sinequa’s GenAI provides the guardrails, keeping the LLM focused on company data and thereby reducing, if not eliminating, the hallucinations that unguided LLMs, fed a diet of publicly available information, are known for.

If ChatGPT is a wild horse, foraging in dangerous places, dropping crap everywhere and prone to hallucination, think of Sinequa as putting a harness on the horse, throwing a saddle over it and giving it oats. And the riders don’t have to clean up after it.

This blend of Sinequa’s search technology with LLMs like ChatGPT creates a powerful tool for enterprises, transforming how users access, understand, and act on their vast data repositories.

About Sinequa

Sinequa has been doing semantic search forever, in Internet years, that is. Initially, it was just a research group operating in a small Paris office. Its researchers may have been the first to set out to make computers understand our language rather than making us learn theirs. Alexandre Bilger acquired the company in 2002. Early on, it had established itself in cutting-edge enterprise search with semantic search, the type of search that, among other things, completes your search phrase à la Google.

The company received its first round of financing, $5.19 million, at the beginning of 2007. Realizing that more gold lay in North America, it set up a New York City office in 2014. A Paris-based private equity firm didn’t mind the company turning its eyes toward America and kicked in $23 million in 2019.

Sinequa was on a roll, only to be drowned out by the din over ChatGPT. The hype over LLMs, with their inherent unreliability and propensity for wrong answers, may have left most executives disillusioned with LLMs in general. CIOs knew LLMs were trained on the world’s data, much of it of dubious value. How much of Reddit, Wikipedia, Facebook or X (formerly Twitter) can you trust? It is now up to enterprise search companies such as Sinequa to convince them there is treasure to be found.

Adapting to harness LLMs (including ChatGPT) is a smart move for Sinequa, and adding guardrails that keep the focus on company data is an even smarter one. Now it has to convince companies that there is treasure in those data silos and warehouses, if only they know where to dig.