What has Greg Mark been up to since he sold his Markforged 3D printing company for $2.1 billion? Many things, one of which is developing Backflip, an AI-assisted design engine. After receiving $30 million in funding, Backflip announced that it has emerged from stealth mode.

In the announcement were claims that you could give Backflip a 2D image (a photo, sketch, illustration) and it would create a 3D model. Best of all, it could make a 3D shape from natural language prompts.

That would be genius, I thought. I admit to attributing genius to those who agree with me. I had been proposing text-to-shape to all who would listen. They said it couldn’t be done, not with large language models. LLMs like ChatGPT are only able to give text answers. Math still eludes LLMs, so don’t expect calculations or shapes, which are mathematically defined. Even if they could do math and shapes, the prompting would be so lengthy and laborious that you could have created the part in CAD sooner.

But Greg Mark may not have been paying attention. He imagined an AI that produced a 3D shape when it was little more than a notion in the mind of a designer or engineer.

How Does it Work?

After two years of the world in an AI fever (ChatGPT was released in November 2022), after personally pleading with CAD companies for AI for designers and engineers and being told it couldn’t be done, I couldn’t get to the Backflip.ai site fast enough.

Backflip couldn’t have been more welcoming. All it requires is an email address to set up an account. For an unspecified trial period, it is free to use. You have unlimited usage (credits). Clearly, the intention is to get attention and users while the company ramps up features and capabilities and works out the kinks.

The program runs in the cloud through a web browser. I used Chrome. There is nothing to download. Consequently, you can use it immediately and on any device.

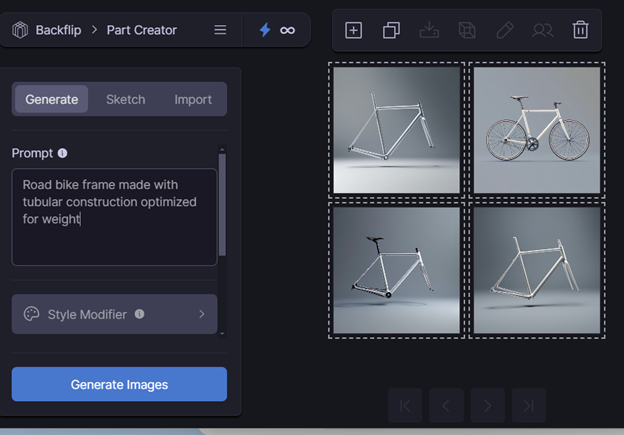

The interface is clean and uncluttered, with a text box to accept a prompt and buttons for importing images or sketching. Most of the screen is dedicated to the display of images and shapes. This is not a programmer’s tool that has just emerged from the lab. In fact, if all Backflip had to do was make rendered images, it could pass for a finished product.

I get started by asking for a simple shape with minimal prompting, like “Make me an L-shaped bracket with four mounting holes.”

Clearly, a lot of part detail is not supplied. This matters not to Backflip. Like a retriever, Backflip is off and running. It fills in the gaps in the master’s commands. Fish or fowl, size, bent, extruded or cut from plate? Eager to please, Backflip makes assumptions. In seconds, it has fetched four possible angle brackets. Are any of them to your liking? If not, I am ready to go again. It’s not even panting.

As it turns out, one bracket is close to what I had imagined.

What just happened? I have to pause to reflect. Could this be the next design revolution? CAD had promised to help us design, but all it does, all it has ever done, is wait, its screen blank, until we figure out the shape ourselves. Only then does CAD deign to draw it.

But with Backflip, you can keep clicking on the “generate images” button to create as many shapes as your heart desires. I imagine that with increased popularity, Backflip will turn off the free supply. Enjoy it while you can.

All four suggested shapes are 2D images at this stage, but select one of them, and you can turn it into a 3D part, which can be inspected from any angle without even clicking, though not zoomed into. You can download the part in STL format for 3D printing or in OBJ (3D rendering), GLB (AR and VR) and PLY (3D scanning) formats.
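For readers who want to sanity-check a downloaded STL before sending it to a printer, here is a minimal Python sketch. It assumes the file is a binary STL, which by convention has an 80-byte header, a 4-byte little-endian triangle count, and 50 bytes per triangle. Whether Backflip exports binary or ASCII STL is not stated, so treat this as an illustration under that assumption. The function names are hypothetical, and the one-triangle file is built in memory purely for demonstration.

```python
import struct

HEADER_BYTES = 80      # fixed-size header in a binary STL file
TRIANGLE_BYTES = 50    # 12 float32 values (48 bytes) plus a 2-byte attribute field


def binary_stl_triangle_count(data: bytes) -> int:
    """Return the triangle count declared in a binary STL, after a size check."""
    if len(data) < HEADER_BYTES + 4:
        raise ValueError("too short to be a binary STL")
    # The count is an unsigned 32-bit little-endian integer right after the header.
    (count,) = struct.unpack_from("<I", data, HEADER_BYTES)
    expected = HEADER_BYTES + 4 + TRIANGLE_BYTES * count
    if len(data) != expected:
        raise ValueError(f"size mismatch: expected {expected} bytes, got {len(data)}")
    return count


def make_one_triangle_stl() -> bytes:
    """Build a tiny in-memory binary STL (one triangle) for demonstration only."""
    header = b"demo".ljust(HEADER_BYTES, b"\0")
    normal = (0.0, 0.0, 1.0)
    vertices = (0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0, 0.0)
    triangle = struct.pack("<12fH", *normal, *vertices, 0)
    return header + struct.pack("<I", 1) + triangle


if __name__ == "__main__":
    print(binary_stl_triangle_count(make_one_triangle_stl()))
```

With a real download, you would pass in the file's bytes instead, for example `Path("bracket.stl").read_bytes()` from `pathlib`, where `bracket.stl` is a placeholder name. An ASCII STL would fail the size check, which is itself a useful signal about what you received.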

Is it too greedy to ask Backflip to convert directly to popular CAD formats?

Backflip has been innovating with AI, creating what it says is a whole new category of AI, one that thinks in 3D.

“AI language models capture how we think, vision models capture how we see, and Backflip is creating foundation models that capture how we build,” said cofounder David Benhaim. “We’ve invented a novel neural representation that teaches AI to think in 3D.”

The technology developed for Backflip yields “60x more efficient training, 10x faster inference and 100x the spatial resolution of existing state-of-the-art methods,” according to Benhaim.

What’s Next?

Backflip for brackets? Done. Clearly, Backflip aims higher: nothing less than providing a “kernel for building the real world,” says Benhaim in the announcement. It’s real 3D, with all its realism and utility, not the fanciful 2D images of today’s text-to-image programs, Stable Diffusion or Gaussian splats.

It was all too convenient for established CAD giants to say ChatGPT and other LLMs would be useless for shapes, and text-to-shape programs would never amount to anything more than playful curiosities.

Backflip has flipped that script.

The $30 million in funding was co-led by NEA and Andreessen Horowitz, AKA a16z. Angel investors include Kevin Scott, CTO of Microsoft and cofounder of LinkedIn; Rich Miner, Android founder and AI futurist; and Ashish Vaswani, one of eight authors of the seminal “Attention Is All You Need” research paper credited with the birth of LLMs.