NVIDIA CEO Jensen Huang delivered the first keynote at CES 2025 and set the stage for the future of AI. With continuing advances in GPU hardware, the chips that most AI runs on these days, NVIDIA reaffirmed its position as the hardware leader in the tech industry.

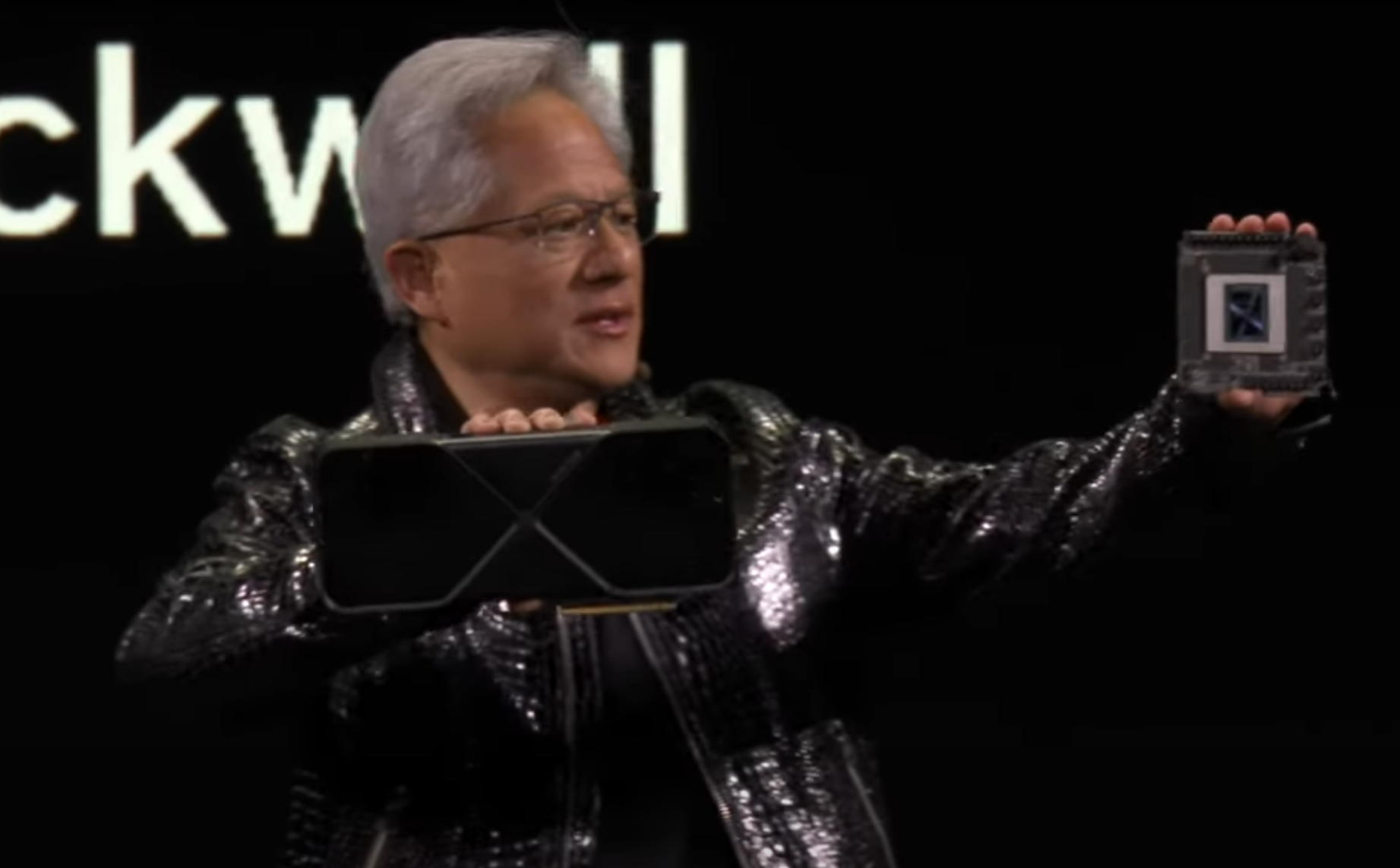

Huang’s first announcement was the GeForce RTX 5090, the “most powerful graphics card NVIDIA has ever developed,” perhaps paying homage to NVIDIA’s beginnings as a maker of graphics cards for gamers. Built on the Blackwell architecture, the RTX 5090 introduces significant advancements in performance and efficiency and may indeed raise the bar for gaming and content creation.

“The GeForce RTX 5090 is not just an upgrade; it’s a revolution for gamers and creators alike,” Huang declared. “It blurs the line between the virtual and the real, making experiences more immersive than ever.”

Graphics Imagined Mostly, Some Computed

Huang emphasized the rapid advancement of artificial intelligence, stating that AI is progressing at an “incredible pace.” He outlined the evolution of AI from perception AI (understanding images, words and sounds) to generative AI, which creates text, images, video and sound.

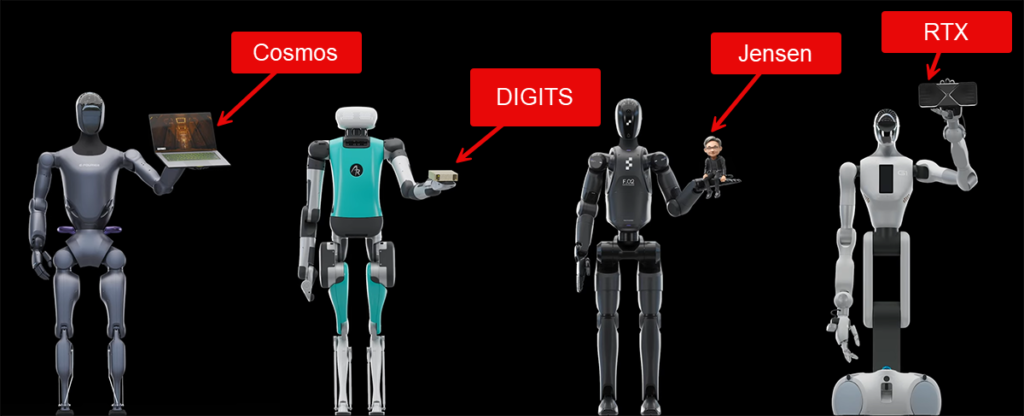

Huang introduced the concept of “physical AI,” describing it as AI that can perceive, reason, plan and act but is deeply rooted in the physical world. NVIDIA’s GPUs and platforms are central to enabling breakthroughs across industries, including gaming, robots and autonomous vehicles, said Huang (more on that later).

Huang underscored the exponential rate of data creation.

“In the next couple of years, humanity will produce more data than it has produced since the beginning,” he said.

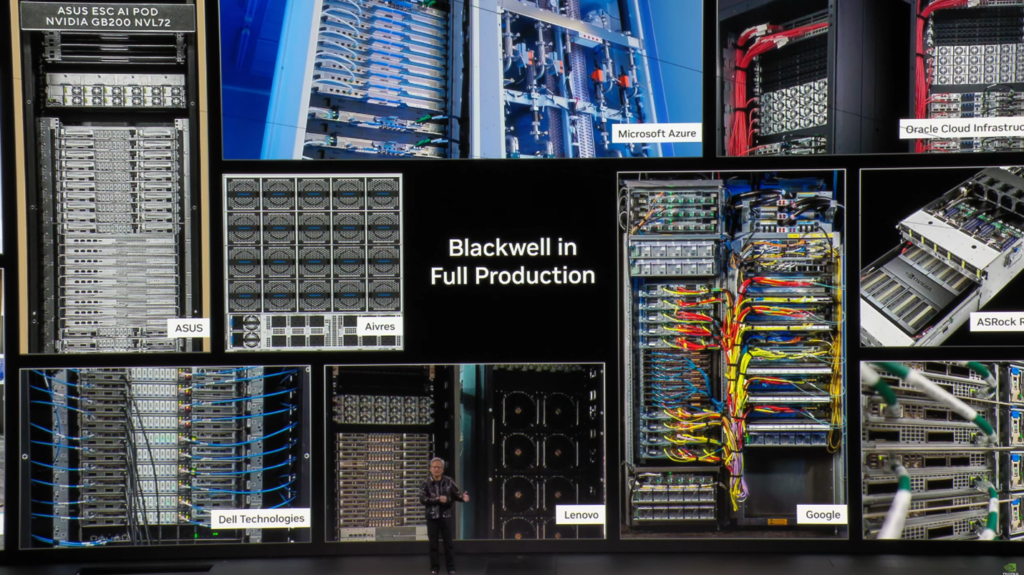

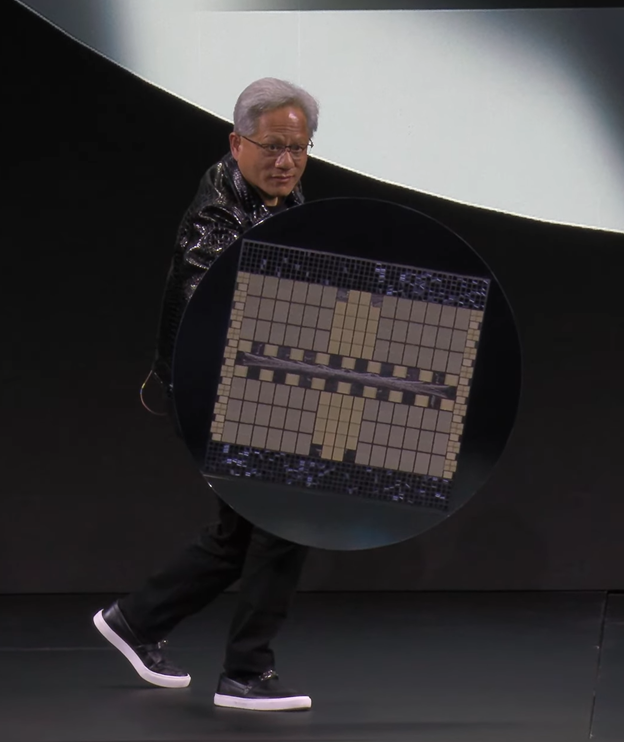

Huang announced that the company’s latest-generation AI processor series, Blackwell, is now in full production. He said that every major cloud service provider has Blackwell systems up and running, and he showcased systems from 15 computer manufacturers at the event. Huang emphasized that Blackwell is the engine of AI, bringing significant advancements to PC gamers, developers, and creatives.

A Token Investment

Huang explained how NVIDIA’s new GPUs, which deliver better performance while using less energy, will allow data centers to make more money. Not only will they cut cooling costs, a major expense for data centers, but they will also enable data centers to generate more AI tokens, a critical metric for monetizing AI services.

NVIDIA’s latest Blackwell-based GPUs, such as the RTX 5090 and the Grace Blackwell NVLink 72 systems, deliver significantly better energy efficiency so data centers can achieve the same computational output with far less electricity.

Or a data center could run at full tilt and generate more AI tokens, the key unit of output in the AI-driven economy. Tokens, the building blocks of AI-generated text or other outputs, can be produced faster and at lower cost.

For example, suppose a data center previously generated 1 billion tokens daily at a specific energy cost. Improved efficiency might now allow it to generate 1.5 billion tokens using the same energy, directly increasing revenue potential.
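The arithmetic in this example can be sketched in a few lines of Python. The price per million tokens is a hypothetical figure chosen only for illustration; neither it nor the function name comes from the keynote.

```python
def daily_token_revenue(tokens_per_day: int, price_per_million_usd: float) -> float:
    """Revenue from selling one day's worth of generated tokens."""
    return tokens_per_day / 1_000_000 * price_per_million_usd

# Hypothetical price, assumed for illustration only.
PRICE_PER_MILLION_USD = 2.00

before = daily_token_revenue(1_000_000_000, PRICE_PER_MILLION_USD)
after = daily_token_revenue(1_500_000_000, PRICE_PER_MILLION_USD)

# Same energy budget, 1.5x the tokens: revenue grows by the same factor.
uplift = after / before - 1
print(f"before: ${before:,.0f}/day, after: ${after:,.0f}/day, uplift: {uplift:.0%}")
```

Whatever price is assumed, the uplift stays at 50 percent, because the energy bill is unchanged while output rises by half.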

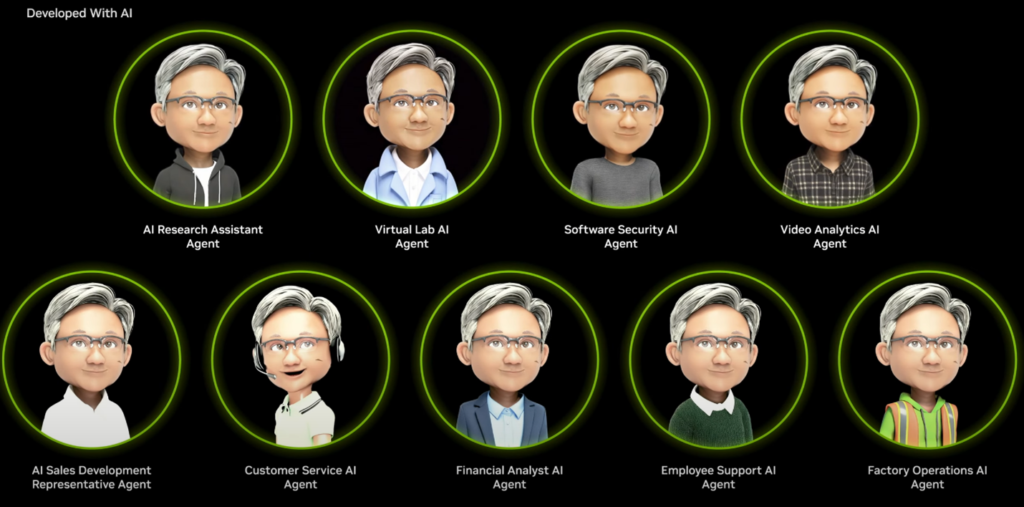

Agentic AI

“The age of AI agentics is here,” said Huang, signaling a transformative shift in artificial intelligence. He described agentic AI as a “multitrillion-dollar opportunity” that will revolutionize work across industries. Huang emphasized that AI agents are becoming the new digital workforce, capable of reasoning, planning, and acting autonomously.

- NVIDIA Cosmos: A platform designed to advance physical AI by providing new models and video data processing pipelines for robots, autonomous vehicles, and vision AI.

- AI Foundation Models for RTX PCs: These models feature NVIDIA NIM microservices and AI Blueprints for crafting digital humans, podcasts, images, and videos, enabling the development of specialized AI agents to automate tasks.

Companies will develop the agents into assistants for many of their roles, predicts Huang. Perhaps the best example is developers, for whom AI code generation is already widely used.

There are 30 million developers who could use an AI agent, says Huang.

Foundation for the World

Huang thinks the next big thing in AI (after LLMs, which work only with text) is physical AI, which works with physics. In the keynote, Huang introduced NVIDIA’s Cosmos, the world’s first World Foundation Model, which uses physical AI.

Cosmos is designed to model the physical world. It has learned its physics not from sitting in class or reading books but from “20 million hours of video” of objects in motion, interactions between objects, and physical environments.

This foundational knowledge allows Cosmos to create simulations that reflect real-world behavior and to predict motion and interactions. That makes it well suited to robotics, autonomous vehicles, factory automation and warehouse optimization, fields where real-world data is limited or costly to capture.

Huang announced that Cosmos will be openly licensed and freely available on GitHub.

The Cosmos World Foundation Model is a sophisticated AI system that ingests and understands multimodal data, including text, images, and video, to generate realistic simulations and predictions about the physical world. Unlike LLMs that process text-based tokens, the World Foundation Model generates “action tokens,” enabling it to predict and simulate real-world behavior based on actual Newtonian physics.

Huang discussed autonomous vehicles (AVs) as one of the most significant applications of physical AI and NVIDIA’s advanced computing platforms. He outlined the current state of AV technology, NVIDIA’s contributions, and how physical AI plays a critical role in testing, training, and advancing the capabilities of autonomous systems (more on that later).

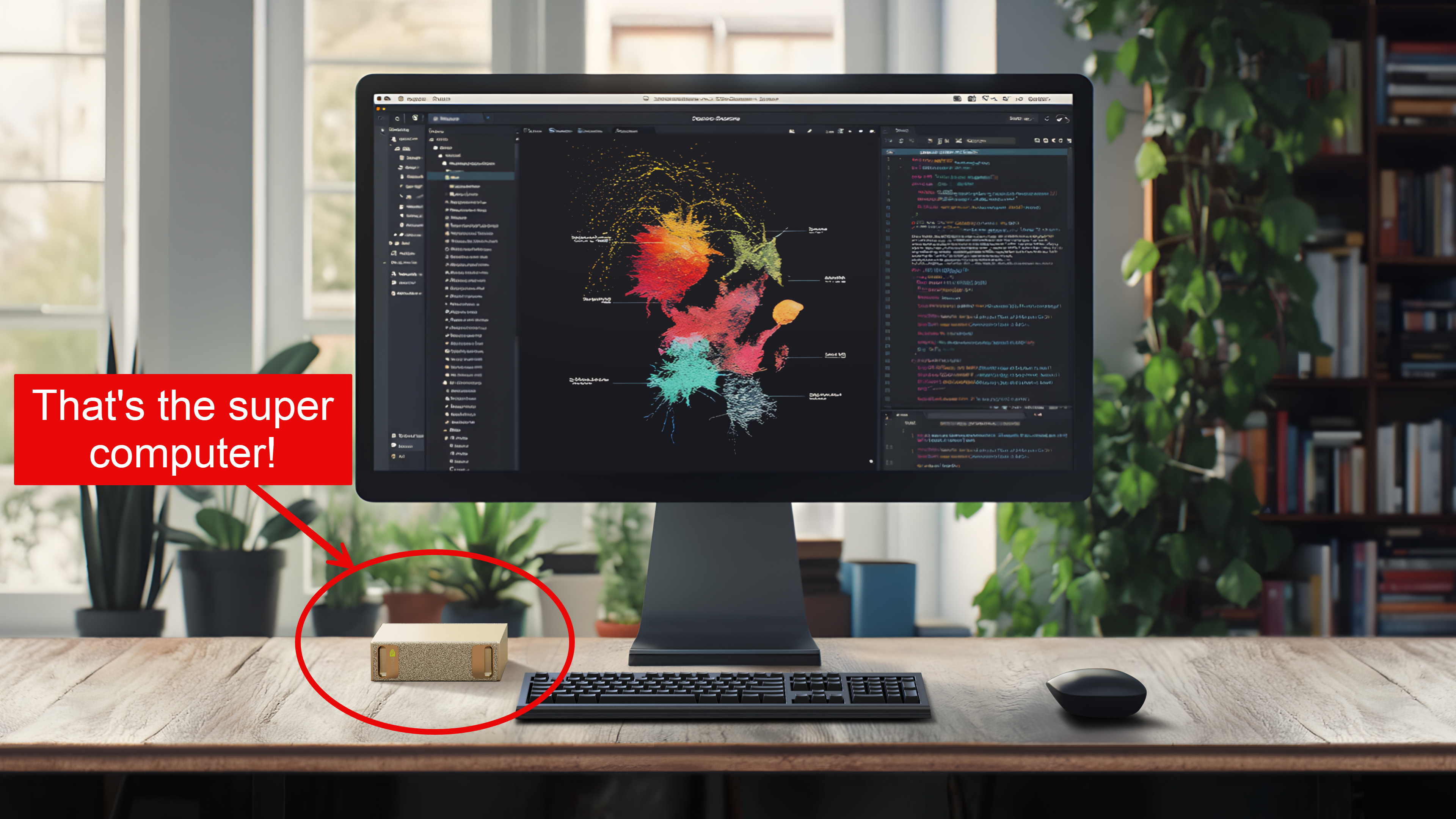

DIGITS – Your Own Personal Supercomputer

Huang introduced DIGITS, a personal supercomputer that will be available in May. The diminutive computer (see image above) is the productization of a project called “Deep Learning GPU Intelligence Training System” (DIGITS), a name NVIDIA shortened to DGX for the AI supercomputer it introduced in 2016.

DIGITS is meant to be used for the development of AI applications. Users can access the whole of the NVIDIA AI software library for experimentation and prototyping, including software development kits, orchestration tools, frameworks and models available in the NVIDIA NGC catalog and on the NVIDIA Developer portal. Developers can fine-tune models with the NVIDIA NeMo framework, accelerate data science with NVIDIA RAPIDS libraries and run common frameworks such as PyTorch, Python and Jupyter notebooks, according to NVIDIA.

That NVIDIA is making a desktop supercomputer that can run Windows applications (with WSL 2, the Windows Subsystem for Linux) should be a wake-up call, if not a fire alarm, for every PC manufacturer. Here is the second most valuable computer hardware company, no longer content to make chips and boards for PC manufacturers, stepping out to make its own PCs. It’s as if makers of jet engines decided to make airplanes. No specifics, like benchmark comparisons, were given at CES, but since DIGITS will be operating at the scale and speed of DGX systems, thanks to the advanced architecture of the GB10 chip and its integration with NVIDIA’s AI software stack, we expect it to blow the doors off any traditional Intel-based PC running AI-assisted software… and what software will not be within the year?

An AI-Based Windows?

Huang outlined a vision for a future version of Windows that would be deeply integrated with AI capabilities. He referenced the revolutionary impact of Windows 95, which introduced multimedia APIs that transformed the software development landscape. He likened this transformative potential to what he envisions for the future of AI on Windows PCs.

Huang introduced the concept of “generative APIs,” which would allow developers to integrate AI directly into applications on Windows PCs. These APIs would enable:

- Generative AI for Language: Advanced natural language processing for creating text and responding to queries.

- Generative AI for Graphics: Tools for producing 3D models, animations, and video content.

- Generative AI for Sound: Capabilities for audio synthesis and manipulation.

These APIs would extend the functionality of traditional computing by bringing AI-assisted tools directly into everyday applications.

Currently, NVIDIA is using Windows Subsystem for Linux (WSL) 2, which provides a dual-operating-system environment optimized for AI development.

Autonomous Vehicles

NVIDIA’s automotive vertical business is currently at $4 billion and is expected to grow to approximately $5 billion in fiscal year 2026, according to NVIDIA.

Huang highlighted that autonomous vehicles, after years of development, are now becoming mainstream, citing the success of companies like Waymo, Tesla, and Aurora (AV trucks). He characterized the AV industry as likely to become the first multi-trillion-dollar robotics market, driven by the massive demand for self-driving cars and trucks.

Each year, 100 million cars are built, said Huang. A billion cars are on the road globally, collectively driving a trillion miles annually. Autonomous capabilities will revolutionize how these vehicles operate.

NVIDIA is working with major automakers such as Mercedes, BYD and Toyota, and with startups such as Zoox and Waymo, to develop next-generation autonomous systems.

Autonomous vehicles require a “three-computer solution” tailored for different stages of development and deployment:

- Training AI Models (DGX Systems) for training AV models using vast datasets and simulations.

- Simulation and Synthetic Data Generation (Omniverse & Cosmos) to create environments and situations to enable extensive tests before real-world deployment.

- In-Car AI Systems (Drive AGX Thor): NVIDIA’s latest AI supercomputer for cars, which processes massive amounts of sensor data, from cameras to LiDAR and radar, to make real-time driving decisions.

Thor offers 20 times the processing power of its predecessor, making it suitable not only for AVs but also for robotics and other high-performance applications.

Huang emphasized the importance of testing AV systems with synthetic data and simulations powered by physical AI. These technologies allow NVIDIA to simulate real-world driving conditions at an unprecedented scale and fidelity. NVIDIA’s Cosmos platform generates lifelike driving scenarios based on real-world data, including weather, lighting, and road conditions. This allows AV models to train on edge cases that are rare or dangerous to capture in real life. Using NVIDIA Omniverse, AV systems can simulate billions of miles of driving by replaying and altering existing driving logs. For example, recorded real-world footage of a drive can be altered to add rain and snow, change the time of day, increase traffic, and more. This would allow the AV industry to turn thousands of real-world drives into billions of simulated miles, multiplying the training data by orders of magnitude.
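As a rough illustration of how replaying and altering logs multiplies training data, the Python sketch below enumerates scenario variants for recorded drives. The condition lists, the drive count, and the miles-per-drive figure are all made-up assumptions. The code does not use any Omniverse or Cosmos API; it only counts combinations.

```python
from itertools import product

# Hypothetical variation axes; the keynote mentions weather, time of day and traffic.
WEATHER = ["clear", "rain", "snow"]
TIME_OF_DAY = ["dawn", "noon", "dusk", "night"]
TRAFFIC = ["light", "moderate", "heavy"]

def scenario_variants():
    """Every combination of conditions one recorded drive could be replayed under."""
    return list(product(WEATHER, TIME_OF_DAY, TRAFFIC))

def simulated_miles(recorded_drives: int, miles_per_drive: float) -> float:
    """Total simulated miles if each drive is replayed once per variant."""
    return recorded_drives * miles_per_drive * len(scenario_variants())

variants = scenario_variants()
print(len(variants))                  # number of condition combinations
print(simulated_miles(1_000, 20.0))   # assumed: 1,000 drives of 20 miles each
```

Even three short lists of conditions multiply each recorded drive many times over. Reaching billions of miles would take far more variation per drive, which is the role the article describes for generated scenarios.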

Huang stressed that the ability to simulate edge cases—such as unpredictable pedestrian behavior or hazardous weather—is critical for reducing risk with autonomous vehicles.

Huang concluded his keynote with a forward-looking statement about the transformative role of AI and computing in society. “We are entering an era where AI will become as ubiquitous as electricity,” he said. “From healthcare to entertainment, from scientific research to autonomous systems, NVIDIA’s technologies will drive the next wave of innovation.”