SAN JOSE, CA (GTC), Mar 21, 2025 – NVIDIA has unveiled NVIDIA Dynamo, an open-source inference software for accelerating and scaling AI reasoning models in AI factories while optimizing cost and efficiency.

As AI reasoning goes mainstream, every AI model will generate tens of thousands of tokens used to “think” with every prompt. Increasing inference performance while reducing costs helps service providers expand growth and create more revenue opportunities.

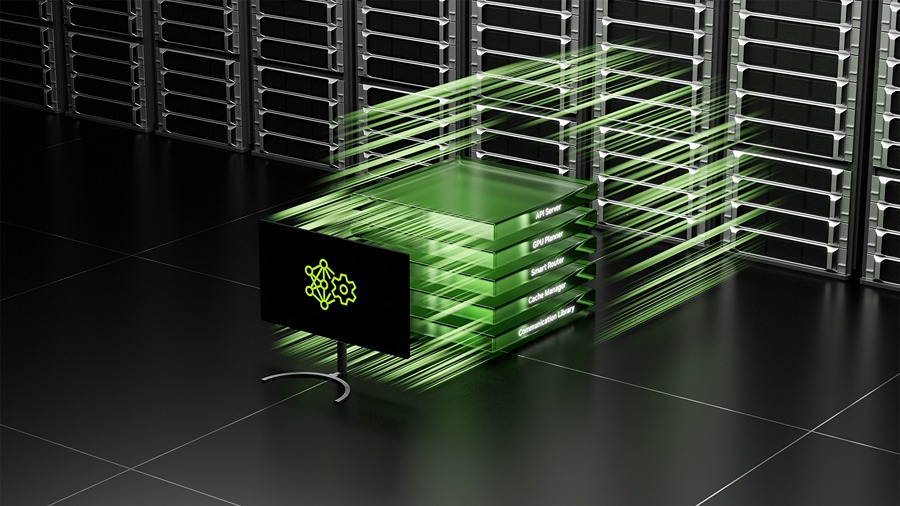

NVIDIA Dynamo is AI inference-serving software built to improve token revenue for AI factories using reasoning AI models. As the successor to the NVIDIA Triton Inference Server, it enhances communication across thousands of GPUs. It uses disaggregated serving to separate large language models’ processing and generation phases (LLMs) on different GPUs. This approach allows each stage to be fine-tuned independently and better uses GPU resources.

“Industries around the world are training AI models to think and learn in different ways, making them more sophisticated over time,” said Jensen Huang, founder and CEO of NVIDIA. “To enable a future of custom reasoning AI, NVIDIA Dynamo helps serve these models at scale, driving cost savings and efficiencies across AI factories.”

Using the same number of GPUs, Dynamo doubles the performance and revenue of AI factories serving Llama models on the NVIDIA Hopper platform. When running the DeepSeek-R1 model on a GB200 NVL72 racks, NVIDIA Dynamo’s intelligent inference optimizations also boost the number of tokens generated by over 30x per GPU.

NVIDIA Dynamo incorporates features that increase throughput and reduce costs to achieve these inference performance improvements. It adjusts GPU usage based on varying request volumes and types. The system can reassign, add, or remove GPUs as needed, and it identifies GPUs in large clusters that handle computations effectively. It also reduces costs by retrieving inference data to affordable memory or storage devices when required.

NVIDIA Dynamo is open source and supports PyTorch, SGLang, NVIDIA TensorRT-LLM, and vLLM to allow enterprises, startups, and researchers to develop and optimize ways to serve AI models across disaggregated inference. It will enable users to accelerate the adoption of AI inference, including at AWS, Cohere, CoreWeave, Dell, Fireworks, Google Cloud, Lambda, Meta, Microsoft Azure, Nebius, NetApp, OCI, Perplexity, Together AI, and VAST.

Inference Supercharged

NVIDIA Dynamo maps the knowledge that inference systems hold in memory from serving prior requests – known as KV cache – across potentially thousands of GPUs. It then routes new inference requests to the GPUs with the best knowledge match, avoiding costly recomputations and freeing up GPUs to respond to incoming requests.

“To handle hundreds of millions of requests monthly, we rely on NVIDIA GPUs and inference software to deliver the performance, reliability and scale our business and users demand,” said Denis Yarats, chief technology officer of Perplexity AI. “We look forward to leveraging Dynamo, with its enhanced distributed serving capabilities, to drive even more inference-serving efficiencies and meet the compute demands of new AI reasoning models.”

Agentic AI

AI provider Cohere is planning to power agentic AI capabilities in its Command series of models using NVIDIA Dynamo.

“Scaling advanced AI models requires sophisticated multi-GPU scheduling, seamless coordination, and low-latency communication libraries that transfer reasoning contexts seamlessly across memory and storage,” said Saurabh Baji, senior vice president of engineering at Cohere. “We expect NVIDIA Dynamo will help us deliver a premier user experience to our enterprise customers.”

Disaggregated Serving

The NVIDIA Dynamo inference platform supports disaggregated serving, which assigns the different computational phases of LLMs – including building an understanding of the user query and generating the best response – to different GPUs. This approach is ideal for reasoning models like the new NVIDIA Llama Nemotron model family, which uses advanced inference techniques for improved contextual understanding and response generation. Disaggregated serving allows each phase to be fine-tuned and resourced independently, enhancing throughput and delivering faster user responses.

Together AI, the AI Acceleration Cloud, is looking to integrate its proprietary Together Inference Engine with NVIDIA Dynamo to enable seamless scaling of inference workloads across GPU nodes. This also lets Together AI dynamically address traffic bottlenecks at various stages of the model pipeline.

“Scaling reasoning models cost effectively requires new advanced inference techniques, including disaggregated serving and context-aware routing,” said Ce Zhang, chief technology officer of Together AI. “Together AI provides industry-leading performance using our proprietary inference engine. The openness and modularity of NVIDIA Dynamo will allow us to seamlessly plug its components into our engine to serve more requests while optimizing resource utilization – maximizing our accelerated computing investment. We’re excited to leverage the platform’s breakthrough capabilities to cost-effectively bring open-source reasoning models to our users.”

NVIDIA Dynamo Unpacked

NVIDIA Dynamo includes four key innovations that reduce inference serving costs and improve user experience:

- GPU Planner: A planning engine that dynamically adds and removes GPUs to adjust to fluctuating user demand, avoiding GPU over- or under-provisioning.

- Smart Router: An LLM-aware router that directs requests across large GPU fleets to minimize costly GPU recomputations of repeat or overlapping requests — freeing up GPUs to respond to new incoming requests.

- Low-Latency Communication Library: An inference-optimized library that supports state-of-the-art GPU-to-GPU communication and abstracts the complexity of data exchange across heterogenous devices, accelerating data transfer.

- Memory Manager: An engine that intelligently offloads and reloads inference data to and from lower-cost memory and storage devices without impacting user experience.

NVIDIA Dynamo will be made available in NVIDIA NIM microservices and supported in a future release by the NVIDIA AI Enterprise software platform with production-grade security, support, and stability.

Source: NVIDIA

About NVIDIA

![]()

NVIDIA Corp. is an American tech company headquartered in Santa Clara, CA. Renowned for designing and manufacturing graphics processing units (GPUs), NVIDIA’s innovations have significantly impacted various sectors. The company’s products and services cater to industries such as gaming, where its GPUs enhance visual experiences; artificial intelligence (AI), providing high-performance computing solutions; automotive, contributing to autonomous vehicle technologies; and robotics, offering advanced AI perception and simulation tools. Over its more than three decades in business, NVIDIA has experienced substantial growth. In the fiscal quarter ending January 2025, the company reported record revenue of $39.3 billion and a net income of $22.1 billion. NVIDIA’s headquarters, designed to facilitate a flat organizational structure, emphasizes information flow and harmony between leadership and employees.