SUNNYVALE, CA, Jan 23, 2025 – Numem is at the Chiplet Summit showcasing its high-performance solutions for chiplet architectures. By accelerating data delivery through new memory subsystem designs, Numem solutions re-architect the hierarchy of AI memory tiers to eliminate the bottlenecks that hurt power and performance.

Growing demand for AI workloads and processors, including GPUs, is worsening the memory bottleneck. SRAM and DRAM performance and scalability are improving more slowly than processor performance, which limits overall system performance. Addressing this requires memory solutions with higher bandwidth and better power efficiency, along with a rethinking of traditional memory designs.

Numem is transforming traditional memory paradigms with its advanced SoC Compute-in-Memory solutions. Built on Numem's patented NuRAM (MRAM-based) and SmartMem technologies, these innovations tackle critical memory challenges head-on. The result is a groundbreaking approach to scalable memory performance, designed to meet the needs of HPC and AI applications.

“Numem is fundamentally transforming memory technology for the AI era by delivering unparalleled performance, ultra-low power, and non-volatility,” said Max Simmons, CEO of Numem. “Our solutions make MRAM highly deployable and help address the memory bottleneck at a fraction of the power of SRAM and DRAM. Our approach facilitates and accelerates the deployment of AI from the data center to the edge, opening up new possibilities without displacing other memory architectures.”

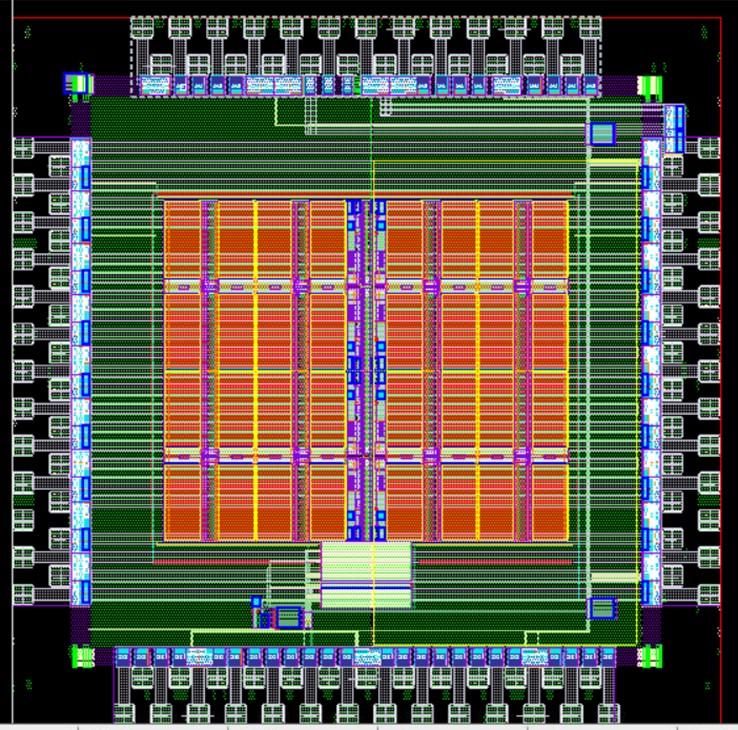

MRAM-Based Chiplet Solution

At the Summit, Numem is showcasing innovative chiplets: nonvolatile, high-speed, ultra-low-power solutions that use MRAM to overcome memory challenges in chiplet architectures. Sampling is expected to begin in late Q4 2025.

Key features and benefits include:

- Unparalleled Bandwidth: Delivers up to 4 TB/s per 8-die memory stack, exceeding existing HBM solutions for AI memory.

- High Capacity: Supports 4 GB per stack package, enabling scalability for demanding AI workloads.

- Nonvolatile with SRAM-Like Performance: Combines ultra-low read/write latency with persistent data retention for reliability and efficiency, providing the scalability and power efficiency that future AI and data-centric workloads demand.

- Power Smart: Game-changing power efficiency for AI edge and data center solutions, with multi-state flex power functions (active, standby, and deep sleep).

- Broad Application Compatibility: Optimized for AI applications across OEMs, hyperscalers, and AI accelerator developers to drive adoption of chiplet-based designs in high-growth markets. Designed with industry-standard interfaces such as UCIe to ease ecosystem compatibility.

- Advanced Integration: Complements other chiplet components (e.g., CPUs, GPUs, and accelerators).

- In-Compute Intelligence: Improves memory performance by managing data flow, optimizing read/write speeds, adjusting power settings, and enabling self-test features.

- Proven Technology: Advanced memory subsystem IP based on a proven foundry MRAM process, with radiation performance that reduces susceptibility to soft errors.

Numem is also demonstrating its patented NuRAM/SmartMem technology, which achieves significantly higher speed, lower latency, and lower dynamic power consumption than other MRAM solutions. It reduces standby power by up to 100x compared to SRAM with similar bandwidth, and delivers up to 4x faster performance than HBM while operating at ultra-low power.

Demonstrations are being given at Numem’s booth #322 on the show floor of the Santa Clara Convention Center, Jan 21-23, 2025.